
Collection: Hierarchical content perception of visual media

Introduction

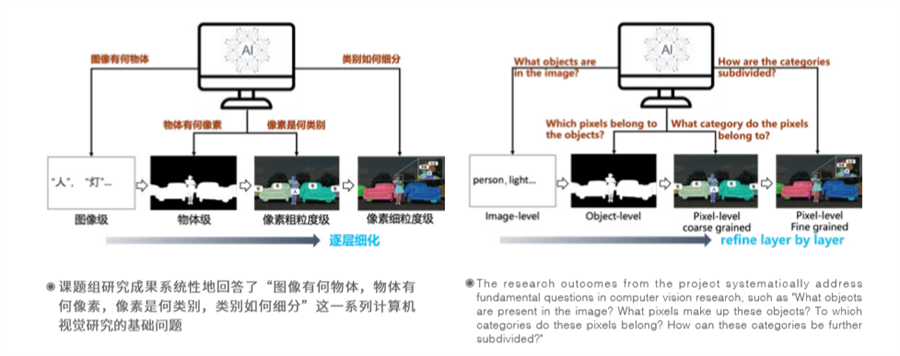

Simulating the brain's cognitive mechanisms to achieve efficient, hierarchical perception of visual content remains a formidable challenge in computer vision. This project explores how to give computers the capability for hierarchical perception. Its research outputs have amassed over 6,000 citations and have played a crucial guiding role in the subsequent evolution of related fields.

The project has developed a progressive theory and solutions for visual perception, from coarse-grained to fine-grained levels

The Digital Media Information Processing Team at Beijing Jiaotong University, in partnership with the Media Computing Laboratory at Nankai University, centered their research on hierarchical content perception in visual media. The initiative systematically investigates the theories and methods of hierarchical visual content perception, establishing a progressive framework that runs from image-level and object-level perception to coarse-grained and fine-grained pixel-level perception. The research addresses a chain of questions: "What objects are in the image? Which pixels make up each object? Which categories do these pixels belong to? How can these categories be subdivided further?" Its scope therefore covers image-level multi-object perception, object-level spatial perception, pixel-level coarse-grained perception, and pixel-level fine-grained perception. Eight seminal papers have been published in international journals and conferences, amassing over 6,000 citations on Google Scholar. Some of these papers are pioneering contributions in their research directions and have significantly influenced subsequent developments in related fields. The work has drawn attention from Turing Award winners and over 100 IEEE Fellows, and some of its technologies have been integrated as standard capabilities in hundreds of millions of Huawei smartphones.

The research clarifies the intrinsic connections among hierarchical tasks in visual content understanding, advancing research on visual perception

First, at the image level, the research elucidates the intrinsic correlation between global and local image perception. It introduced a framework termed "Image Decomposition-Local Perception-Global Fusion", which transforms a multi-class perception task into multiple single-class perception tasks and thereby overcomes the bottleneck of insufficient training samples for multi-class perception. Second, at the object level, the study uncovers how features from different hierarchical layers influence the localization of object pixels within an image. It developed a multi-scale feature fusion architecture with short connections, which mines the complementary information in features from different layers to produce a structurally coherent, detail-rich object localization map. Third, at the coarse-grained pixel level, the research reveals the intrinsic relationship between image-level semantic information and object localization, and proposes a computational paradigm dubbed "Recognition-Erase Adversarial".
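The image-level idea can be illustrated with a short Python sketch. It assumes the image has already been decomposed into region hypotheses, uses a hypothetical `score_region` callable as a stand-in for the shared local classifier, and assumes class-wise max pooling as the global fusion step. Max pooling is a common choice for this style of framework, not a confirmed detail of the project's method. Because each class score comes from whichever region supports that class best, every class is effectively decided as its own single-class problem.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical alias for illustration: scores map class names to confidences.
Scores = Dict[str, float]


def fuse_region_scores(
    regions: Sequence[object],
    score_region: Callable[[object], Scores],
    classes: Sequence[str],
) -> Scores:
    """Global fusion by class-wise max pooling over local region scores.

    Each region is scored independently (local perception); the image-level
    score for a class is the highest score any single region gives it.
    """
    if not regions:
        raise ValueError("at least one region hypothesis is required")
    fused = {c: float("-inf") for c in classes}
    for region in regions:
        local = score_region(region)  # local perception on one region
        for c in classes:
            # A class the region does not score is treated as 0.0.
            fused[c] = max(fused[c], local.get(c, 0.0))
    return fused


def predict_labels(fused: Scores, threshold: float = 0.5) -> List[str]:
    """Multi-label decision: keep every class whose fused score passes the threshold."""
    return sorted(c for c, s in fused.items() if s >= threshold)


if __name__ == "__main__":
    # Toy example: three hypothetical region crops with precomputed scores.
    toy_scores = {
        "crop_a": {"person": 0.9, "dog": 0.1},
        "crop_b": {"person": 0.2, "dog": 0.8},
        "crop_c": {"person": 0.1, "bike": 0.3},
    }
    fused = fuse_region_scores(
        list(toy_scores), toy_scores.__getitem__, ["person", "dog", "bike"]
    )
    print(fused)                  # {'person': 0.9, 'dog': 0.8, 'bike': 0.3}
    print(predict_labels(fused))  # ['dog', 'person']
```

In a real system the scorer would be a trained network and the region hypotheses would come from a proposal method; both are left abstract here on purpose.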

This paradigm builds an inference framework that transfers class semantics from the image level to the pixel level, leading to a suite of theories on coarse-grained semantic segmentation under imperfect annotations. Finally, at the fine-grained pixel level, the study reveals how image context, high-resolution features, and edge details affect fine-grained pixel category perception. It establishes a framework that fuses these complementary cues at the granularity of pixel categories, resolving the new challenge of confusion between the fine-grained semantic categories of adjacent pixels.
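The erase-and-mine intuition behind transferring an image-level label to pixels can be sketched in Python. This is a toy simulation under stated assumptions, not the project's implementation: it assumes the paradigm follows the usual adversarial-erasing pattern (localize the most discriminative region for the image-level class, erase it, repeat), and a fixed, hypothetical per-pixel evidence map stands in for the classifier's localization response. An actual adversarial-erasing approach would retrain the classifier on the erased images at each step. The names `mine_regions_by_erasing`, `steps`, and `ratio` are illustrative.

```python
from typing import List, Set, Tuple

Pixel = Tuple[int, int]
Grid = List[List[float]]


def mine_regions_by_erasing(
    evidence: Grid,
    steps: int = 3,
    ratio: float = 0.5,
    min_peak: float = 1e-6,
) -> Set[Pixel]:
    """Toy erase-and-mine loop producing a pixel mask for one image-level class.

    `evidence` is a hypothetical per-pixel localization map (a stand-in for a
    classifier's response). At each step, pixels whose remaining evidence is at
    least `ratio` times the current peak form the most discriminative region;
    they are added to the mask and erased, so the next step moves on to less
    discriminative parts of the object.
    """
    remaining = [row[:] for row in evidence]  # copy so the input is not modified
    mined: Set[Pixel] = set()
    for _ in range(steps):
        peak = max(max(row) for row in remaining)
        if peak < min_peak:  # nothing left to mine
            break
        region = {
            (r, c)
            for r, row in enumerate(remaining)
            for c, v in enumerate(row)
            if v >= ratio * peak
        }
        mined |= region
        for r, c in region:
            remaining[r][c] = 0.0  # erase the region before the next step
    return mined


if __name__ == "__main__":
    ev = [
        [0.0, 0.1, 0.9],
        [0.0, 0.3, 0.8],
        [0.0, 0.25, 0.04],
    ]
    print(sorted(mine_regions_by_erasing(ev, steps=1)))  # [(0, 2), (1, 2)]
    print(sorted(mine_regions_by_erasing(ev, steps=3)))
    # [(0, 1), (0, 2), (1, 1), (1, 2), (2, 1)]
```

With a single step only the most discriminative pixels are mined; further erasing steps extend the mask into weaker parts of the object, which is why the union of mined regions gives a denser pixel-level pseudo-label than one localization pass.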

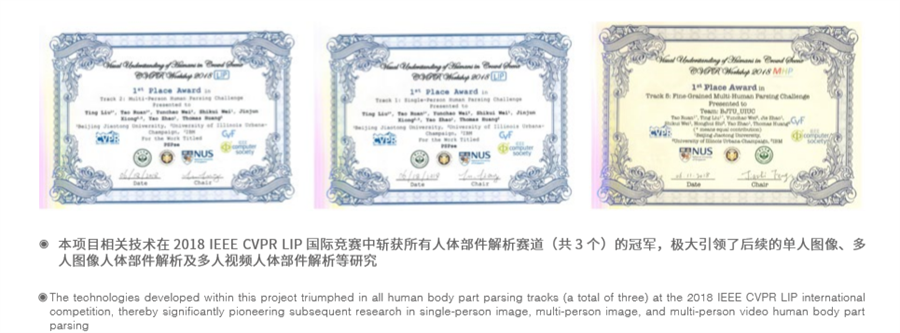

The work has garnered over 6,000 citations on Google Scholar and has been recognized by Turing Award winners and over 100 IEEE Fellows

The project's achievements, encapsulated in eight seminal papers, have attracted widespread attention from leading American institutions such as Carnegie Mellon University and from major research organizations such as Google and Tencent. These papers have been cited by Turing Award laureate Yoshua Bengio and over one hundred IEEE Fellows, accumulating a total of 6,054 Google Scholar citations. Luc Van Gool, a Marr Prize recipient and professor at ETH Zurich, and his collaborators used the project's findings to tackle weakly supervised semantic segmentation in their work presented at IEEE CVPR, subsequently winning that year's CVPR LID competition. In their well-known Cityscapes paper at IEEE CVPR, IEEE Fellow Bernt Schiele and his co-authors praised the project for "exploring semantic segmentation research under various forms of weak annotation." The project's image-level class perception work was the first to push performance on the internationally recognized PASCAL dataset above 90%. In addition, its pixel-level fine-grained perception methods won all fine-grained human parsing tracks of the 2018 IEEE CVPR LIP competition.

The World Internet Conference (WIC) was established as an international organization on July 12, 2022, headquartered in Beijing, China. It was jointly initiated by Global System for Mobile Communication Association (GSMA), National Computer Network Emergency Response Technical Team/Coordination Center of China (CNCERT), China Internet Network Information Center (CNNIC), Alibaba Group, Tencent, and Zhijiang Lab.