Collection: Multi-level multi-type knowledge fused deep learning fundamental methods for natural languages

Introduction

Natural Language Processing (NLP) is a cutting-edge research field in artificial intelligence. In recent years, Tsinghua University and Huawei Technologies Co., Ltd. have devoted significant attention to researching and establishing multi-level, multi-type knowledge-fused deep learning fundamental methods for natural languages. This work has produced internationally advanced and influential outcomes in both academic innovation and open-source sharing.

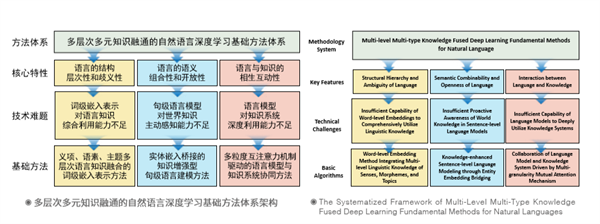

The Systematized Framework of Multi-Level Multi-Type Knowledge Fused Deep Learning Fundamental Methods for Natural Languages

A world-class multi-level, multi-type knowledge-fused deep learning fundamental method for natural languages

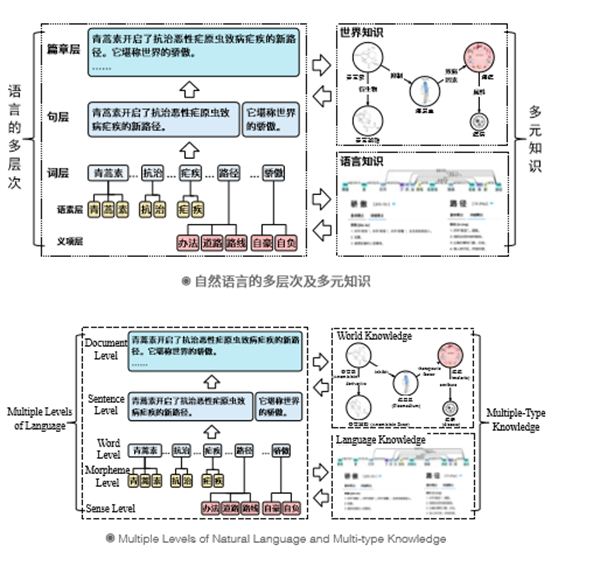

This project addresses three profound challenges: the limited ability of word-level embedding representations to make comprehensive use of linguistic knowledge, the inadequate proactive awareness of world knowledge in sentence-level language models, and the limited ability of language models to deeply utilize knowledge systems. In response, the project has established a relatively complete framework of multi-level, multi-type knowledge-fused deep learning fundamental methods for natural languages. The framework includes a word-level embedding method that integrates multi-level linguistic knowledge of senses, morphemes, and topics; knowledge-enhanced sentence-level language modeling that bridges text and knowledge through entity embeddings; and collaboration between language models and knowledge systems driven by a multi-granularity mutual attention mechanism. Together, these methods tackle significant bottlenecks restricting the advancement of deep learning in NLP and substantially enhance the fundamental capabilities of NLP models.
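To make the idea of entity-embedding bridging more concrete, here is a minimal sketch, not the project's released code, of one common way to fuse a token's hidden vector with the embedding of an entity aligned to it. It assumes a fusion step in the spirit of the information fusion layer described for ERNIE: both vectors are projected into a shared space, summed with a bias, and passed through a GELU nonlinearity, so h = GELU(W_t·w + W_e·e + b). The function names (`matvec`, `gelu`, `fuse_token_and_entity`), the toy dimensions, and the weight values are hypothetical; a real model would learn the weights and operate on batched tensors.

```python
import math
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def fuse_token_and_entity(
    token_vec: Vector,
    entity_vec: Optional[Vector],
    w_token: Matrix,
    w_entity: Matrix,
    bias: Vector,
) -> Vector:
    """Hypothetical fusion step: h = GELU(W_t * w + W_e * e + b).

    When no entity is aligned to the token, the entity term is dropped,
    giving h = GELU(W_t * w + b).
    """
    # Project the token vector into the shared space and add the bias.
    pre = [t + b for t, b in zip(matvec(w_token, token_vec), bias)]
    # Add the projected entity vector only if an entity is aligned.
    if entity_vec is not None:
        pre = [p + e for p, e in zip(pre, matvec(w_entity, entity_vec))]
    # Apply the nonlinearity element-wise.
    return [gelu(x) for x in pre]


if __name__ == "__main__":
    # Assumed toy sizes: 3-d token vectors, 2-d entity vectors, 2-d fused output.
    w_t = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    w_e = [[0.5, 0.0], [0.0, 0.5]]
    b = [0.0, 0.0]
    token = [1.0, -1.0, 2.0]
    entity = [2.0, 2.0]
    print(fuse_token_and_entity(token, entity, w_t, w_e, b))  # entity aligned
    print(fuse_token_and_entity(token, None, w_t, w_e, b))    # no entity
```

In the first call, the entity term shifts the pre-activation from [1.0, -1.0] to [2.0, 0.0], so knowledge from the entity changes the fused representation; in the second call, the output depends only on the token vector.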

An internationally influential open-source system for the fusion of language and knowledge

Based on this algorithmic framework, we have released four open-source software packages on GitHub, the most influential international open-source platform, which together constitute a deep learning open-source system for integrating language and knowledge. The system has received over 2,000 stars and been forked more than 500 times, ranking it among the most influential projects worldwide in deep learning that integrates natural language with knowledge. Notably, those who have forked it include tech giants such as Google, Microsoft, Alibaba, Tencent, JD.com, and Baidu. Our achievement has also been deployed in Huawei Cloud with significant results. Huawei Cloud currently holds the second-largest market share in China and ranks fifth globally; it serves more than 150 countries and regions, has over 30,000 partners and 2.6 million developers worldwide, and lists more than 6,100 applications in its cloud marketplace, making it highly influential on the global stage.

A series of internationally recognized academic papers on the fusion of language and knowledge

The five representative papers of this achievement have drawn considerable attention from the international academic community, with a total of 2,697 citations on Google Scholar; the most-cited single paper has been cited 1,250 times. For example, one representative paper stood out in academic influence among the more than 660 papers published at the top conference ACL 2019. Li Fei-Fei, a member of several American academies, noted in one of her major papers that our achievement delivered the best performance in semantic evaluation at the time. Tomas Mikolov, the creator of the renowned Word2Vec model, also cited our achievement in his seminal work.

The team of Peter Szolovits, a member of the National Academy of Medicine and a professor at the Massachusetts Institute of Technology (MIT), adopted one of our achievements, ERNIE, as the foundational framework for their research. They fully integrated it with medical knowledge bases, developing the c-ERNIE medical model and the medical-domain intelligent question-answering system M-cERNIE. Other notable citers include Dan Jurafsky, a fellow of the American Academy of Arts and Sciences and a professor at Stanford University; Hinrich Schütze, the chair of ACL 2020 and a chaired professor at the University of Munich; Christopher Manning, an ACM/AAAI fellow and director of the Stanford Artificial Intelligence Laboratory (SAIL); and Yuji Matsumoto, an ACL fellow and a renowned NLP scholar in Japan.

The World Internet Conference (WIC) was established as an international organization on July 12, 2022, and is headquartered in Beijing, China. It was jointly initiated by the Global System for Mobile Communications Association (GSMA), the National Computer Network Emergency Response Technical Team/Coordination Center of China (CNCERT), the China Internet Network Information Center (CNNIC), Alibaba Group, Tencent, and Zhejiang Lab.